(A Next-Generation Smart Factory Architecture for OT, IT, and AI Convergence, Validated by Practice)

In today's smart manufacturing landscape, OT, IT, and AI coexist but often remain fragmented: front-line equipment generates vast amounts of data, yet it remains trapped in isolated systems and reports; data granularity and context are lost during cross-layer transmission, forcing AI to make decisions based on "distorted contexts"; even powerful models lack a unified "interface for deployment," making it difficult to form a true closed loop. Meanwhile, security and control requirements are extremely stringent, making it impossible for AI to directly intervene in production lines at will.

A more severe challenge lies in the fact that, even for OT and IT data links, only about 23% of manufacturing enterprises have truly integrated them. When IT struggles to effectively connect with OT, the role of AI in factories becomes even more constrained.

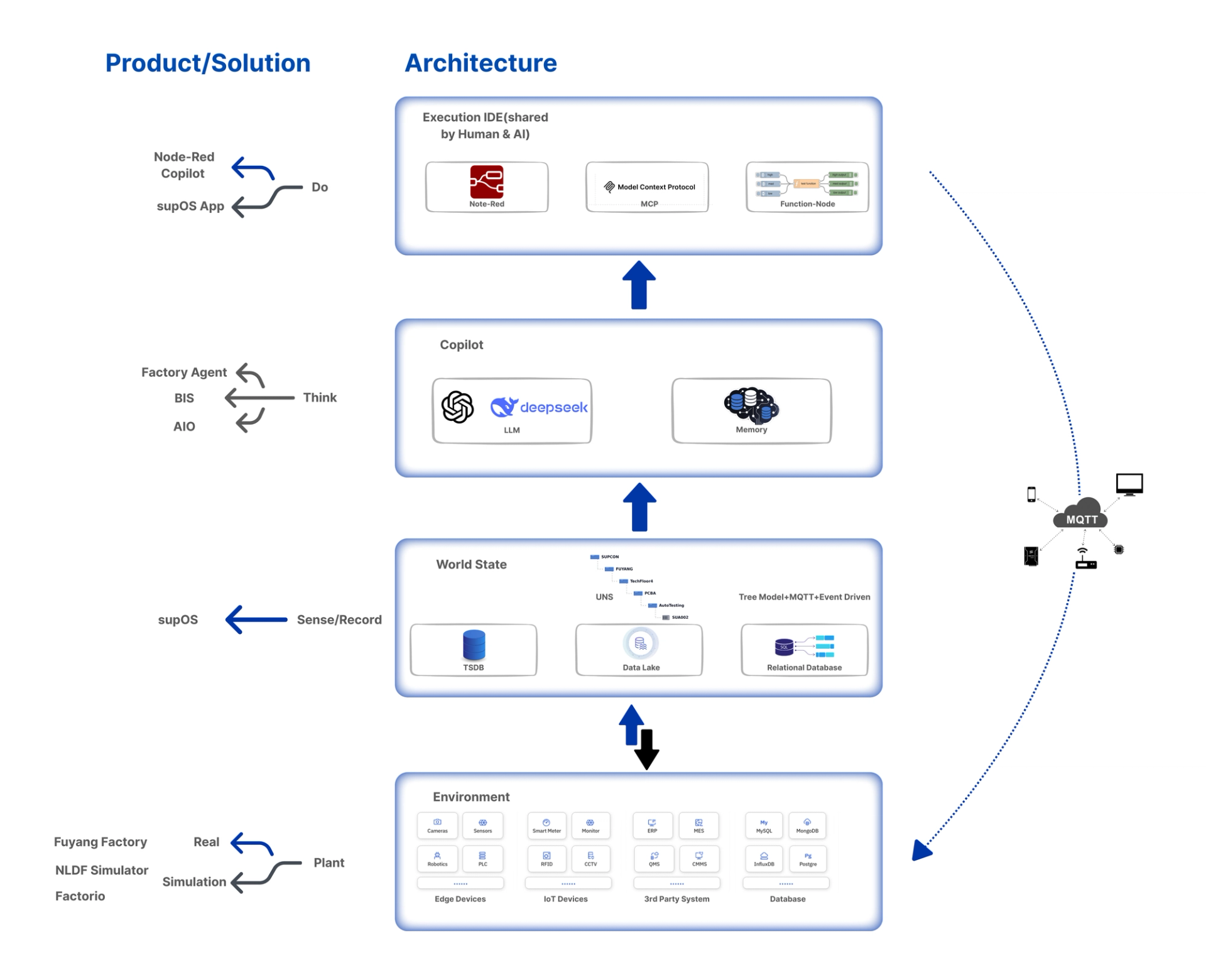

The solution is not to create yet another isolated island, but to reconstruct a three-layer closed-loop architecture that connects OT, IT, and AI:

- Sense Layer (Data): Organize fragmented data into consumable "world states";

- Think Layer (Intelligence): Enable machines to reason and plan within semantics and structures;

- Do Layer (Execution): Ensure decisions are safely and transparently implemented in the real world.

This is not merely a vision on a slide deck: we have already built prototypes and put them into practice in simulated factories (NLDF Simulator, Factory Agent) and real factories (Fuyang Factory).

Imagine this—

"I'm almost full here, pausing feeding."

"Received, I'll hold for a round."

This is not humans conversing, but AGVs from two production lines negotiating using language.

This is precisely the future we are exploring: a next-generation smart factory that can communicate, understand each other, and continuously self-optimize and iterate.

Theoretical Framework

Human intelligence stems from a simple yet powerful closed loop: Sense → Think → Do.

- Sense allows us to see the world, transforming external stimuli into information;

- Think allows us to assign meaning, forming judgments and plans;

- Do allows us to change the environment, and refine our cognition through feedback.

Robotics draws upon this principle, proposing the classic “Sense–Think–Act” paradigm: complex machine intelligence can be broken down into three collaborative modules—a perception module collects environmental signals, a decision module plans strategies, and an execution module performs actions. It is precisely because of this paradigm that robots have gradually moved out of laboratories and into complex real-world environments.

Industrial systems also require such a "brain-like closed loop." Without unified perception, even abundant data remains fragmented; without explainable intelligence, AI becomes a black box, difficult to trust; and without safe and controllable execution, any automation could become a potential risk.

From first principles, a trustworthy intelligent agent system must satisfy three universal laws:

1. Perception must be consumable – Data is not for accumulation or storage, but must be organized and modeled to become a "world state" that can be understood and used by intelligent agents.

2. Intelligence must be explainable – Decisions are not black-box outputs, but must be transformable from language into structured plans, with their logic and context traceable.

3. Execution must be safe and controllable – Actions are not blindly issued, but must operate within a protocolized framework with safety boundaries, supporting human-machine collaboration, rollback, and auditing.

Therefore, the three-layer philosophical framework we propose is not merely a technical architecture, but a universal abstraction for intelligent agent systems:

- Sense Layer (Data): Organizes fragmented data into consumable "world states";

- Think Layer (Intelligence): Enables machines to reason and plan within semantics and structures;

- Do Layer (Execution): Ensures decisions are safely and transparently implemented in the real world.

This is not only a factory's need but also a principle applicable to any system attempting to introduce AI into the physical world.

"Without consumable perception, there is no explainable intelligence; without controllable execution, there is no trustworthy closed loop."

Architecture Overview

We can now use an explainable, controllable, and evolvable "three-layer closed loop (Sense–Think–Do)" to connect semantic perception → decision generation → automated execution into an end-to-end industrial intelligent agent system.

Building on the design philosophy (the three first principles) proposed in the Theoretical Framework section, the implementation uses a three-layer structure, illustrated in the diagram below together with a practical application layer.

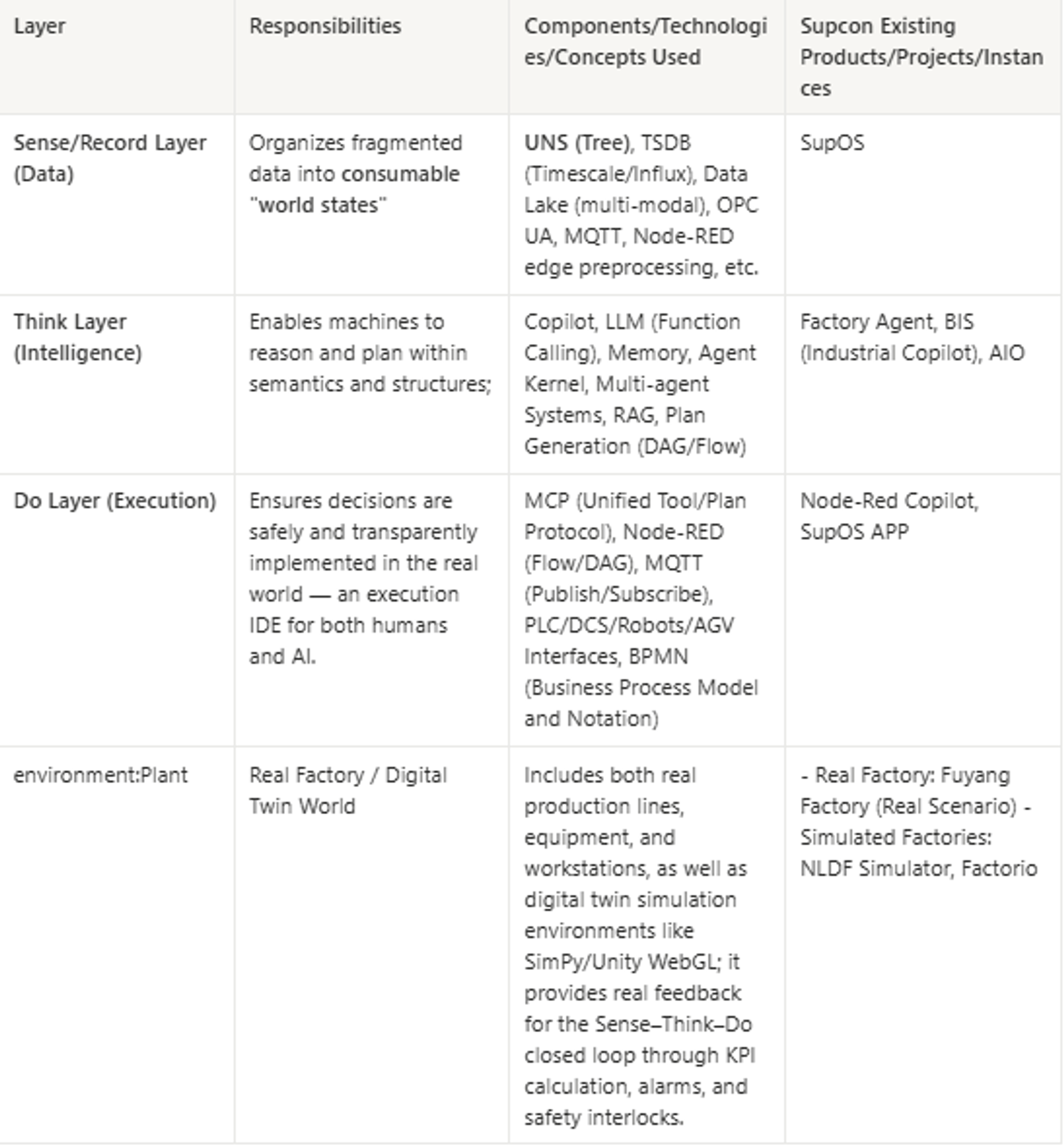

Layered Architecture Analysis + Corresponding Products for Each Layer (Overall Table)

Sense Layer: From Data Silos to Consumable World States

The Sense Layer acts as the "sensory organ" of intelligent agents, responsible for collecting, organizing, and expressing the world state, providing environmental information necessary for decision-making by the Think Layer.

Core Challenge: The Data Silo Problem

For data perception, the real challenge has never been "can data be collected," but rather how to design collection methods from a data consumption perspective. If it's just "grab a bit of data wherever it exists," it often ends up as a pile of unrelated, fragmented archives, each operating independently, ultimately forming data silos.

Such data is like raw materials haphazardly stacked in a warehouse: on the surface, it looks abundant, but it lacks unified numbering, packaging, and shelving; when you truly need to retrieve it, it's difficult to find "the right piece" quickly; more seriously, different batches may even exhibit duplication, conflicts, and semantic inconsistencies.

The result is that the hard-won collected data, instead of becoming "understandable and usable resources," turns into sunk costs.

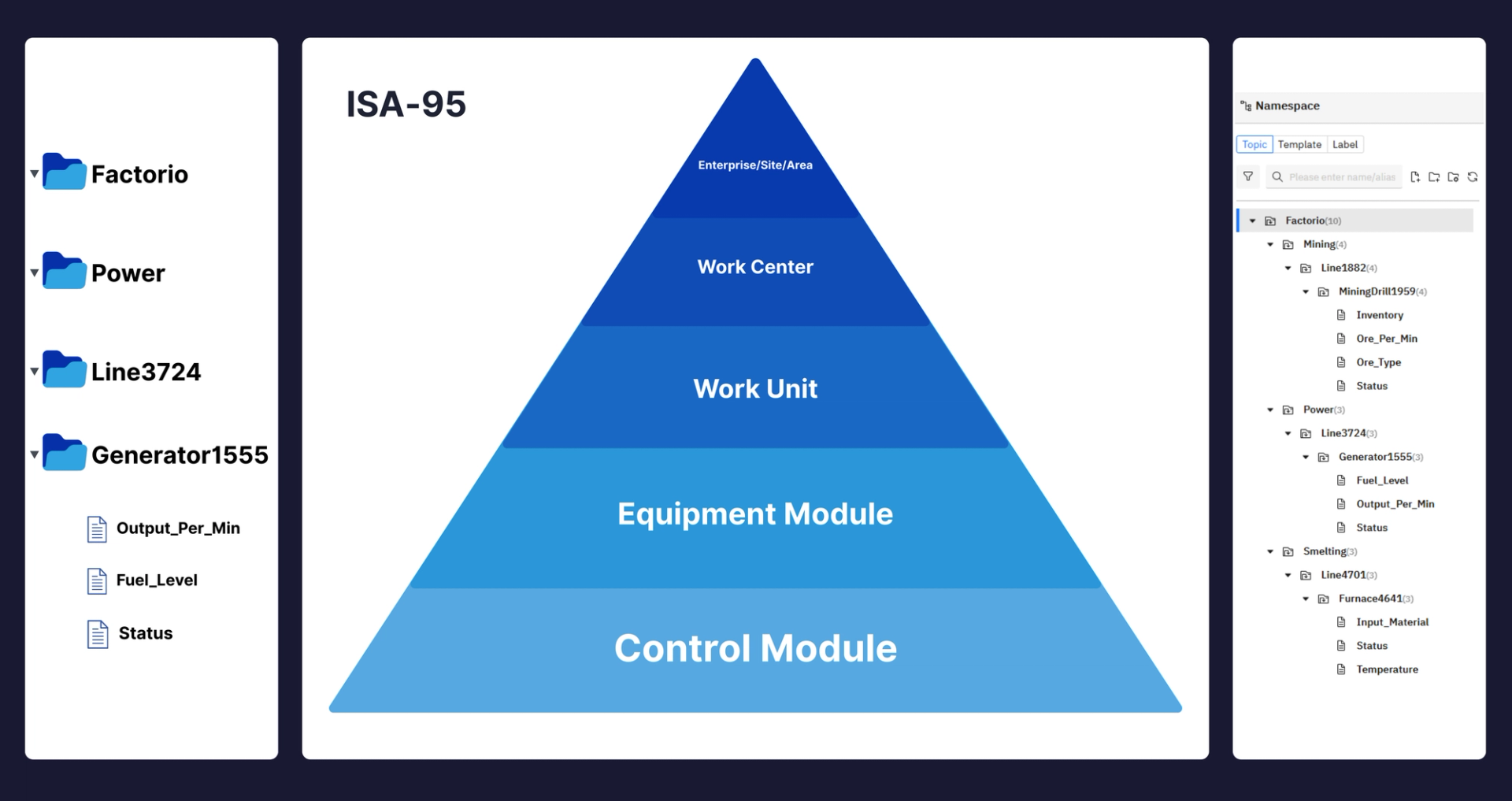

Unified Namespace (UNS) – The Semantic Backbone for Eliminating Data Silos

The introduction of the Unified Namespace (UNS) architecture in the industrial sector is the core cornerstone for eliminating data silos and achieving data interconnection. It goes beyond simple data integration by organizing all factory data with a semantic tree structure, allowing data from different sources and formats to converge into a unified model according to the enterprise architecture. UNS essentially provides a "directory service" and "semantic layer" for industrial data, metaphorically referred to as a single source of truth for real-time data. It offers an intuitive "semantic map" for LLM Agents, enabling them to apply "selective attention" and "context retention" to relevant data streams based on their roles.

UNS Technical Implementation: Semantic Organization Based on ISA-95 Model

The UNS typically uses a tree model to build a unified semantic data structure, organizing factory entities, production processes, and data points hierarchically. ISA-95, the international standard for enterprise-control system integration, is the usual guide for this tree: it defines a hierarchy of physical locations (e.g., enterprise, site, area, production line, work cell), and each piece of equipment or production line is associated with real-time status, action data, or analytical data (e.g., OEE), which makes navigation and inspection straightforward. This semantic organization significantly reduces data silos and format inconsistencies, and it is what lets LLM Agents treat the namespace as a "semantic map" of the factory.
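As a rough illustration of the tree model described above, the sketch below builds UNS topic paths from ISA-95-style levels. The level names, the `uns_topic` helper, and the example identifiers (`acme`, `plant1`, and so on) are assumptions made for illustration; they are not taken from any specific platform.

```python
from dataclasses import dataclass

# Hypothetical ISA-95-style levels, ordered from broadest to narrowest.
LEVELS = ("enterprise", "site", "area", "line", "cell")


@dataclass(frozen=True)
class Asset:
    enterprise: str
    site: str
    area: str
    line: str
    cell: str


def uns_topic(asset: Asset, category: str, metric: str) -> str:
    """Join the hierarchy levels, a data category, and a metric into one topic."""
    parts = [getattr(asset, level) for level in LEVELS] + [category, metric]
    for part in parts:
        # MQTT reserves '/', '+' and '#' for topic structure and wildcards.
        if not part or any(ch in part for ch in "/+#"):
            raise ValueError(f"invalid topic segment: {part!r}")
    return "/".join(parts)


cnc1 = Asset("acme", "plant1", "machining", "line2", "cnc1")
print(uns_topic(cnc1, "state", "spindle_speed"))
print(uns_topic(cnc1, "metrics", "oee"))
```

Because every data point lives at a predictable path, a consumer can find "the OEE of CNC1" by walking the tree rather than by knowing which system produced the value.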

Solution: A Three-Layer Architecture of Collection → Organization → Expression

The core task of the Sense Layer is not merely data "capture," but rather designing the semantic structure, naming system, and contextual relationships of data in advance, from the perspective of how consumers will utilize it. This is crucial for ensuring that data does not become fragmented, but instead becomes the "language and memory" of the intelligent layer, supporting real decisions and actions.

Traditional industrial systems often use binary protocols or proprietary data formats. LLMs, however, are more adept at processing structured, meaningful information. JSON's key-value structure closely matches how LLMs process relationships and meaning, and it supplies semantic richness, contextual relevance, and a hierarchy the model can reason over. Meanwhile, MQTT Topics give LLM Agents self-describing information paths, letting them filter and focus on relevant data streams. This shifts the system from traditional polling to an event-driven approach and significantly reduces information overload.
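To make the contrast between binary frames and self-describing JSON concrete, here is a minimal sketch. The frame layout (`>Hf`), the status codes, and the field names are all hypothetical; a real device would define its own register map.

```python
import json
import struct

# Assumed layout of a raw device frame: big-endian uint16 status code,
# followed by a float32 temperature in degrees Celsius (hypothetical).
FRAME_FORMAT = ">Hf"
STATUS_NAMES = {0: "idle", 1: "running", 2: "fault"}


def frame_to_json(frame: bytes, device_id: str, timestamp: float) -> str:
    """Decode an opaque binary frame into a self-describing JSON payload."""
    status, temperature = struct.unpack(FRAME_FORMAT, frame)
    payload = {
        "device_id": device_id,
        "timestamp": timestamp,
        "status": STATUS_NAMES.get(status, "unknown"),
        "temperature": {"value": round(temperature, 2), "unit": "degC"},
    }
    return json.dumps(payload)


raw = struct.pack(FRAME_FORMAT, 1, 72.5)  # six opaque bytes
message = frame_to_json(raw, "CNC1", 1700000000.0)
print(raw.hex())
print(message)
```

The six raw bytes carry the same measurement as the JSON message, but only the JSON form tells a reader (human or LLM) what the numbers mean and in which unit.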

To achieve the above goals, the Sense Layer subdivides data processing into the following three core steps:

1. Collection

Gathering multi-source data from PLCs, sensors, MES/ERP, quality inspection logs, etc. The challenge lies in fragmented protocols and formats, thus standard interfaces like OPC UA, gateways, and APIs are typically used for access. In this process, edge preprocessing tools like Node-RED also play a key role.

2. Organization

Sorting raw data streams at the edge or aggregation layer, including timestamp alignment, noise filtering, and missing data imputation, as well as supplementing contextual semantics (device ID, process segment, batch number) and standardizing units and formats. This step is crucial for transforming raw data into a "world state" understandable by the intelligent layer.

3. Expression

Transforming the organized data into a "world state" consumable by the intelligent layer.

Data will be stored in these three types of databases:

- TSDB (Time-Series Database): Stores high-frequency sensor data and historical curves, supporting real-time and historical queries.

- Data Lake: Stores multi-modal data (images, videos, documents, logs), supporting offline training, historical modeling, and replay analysis. It follows the "Cloud-Native Lightweight Factory Data Lakehouse" concept, which emphasizes lightweight, cloud-native deployment.

- Relational Database: Stores structured business data and metadata.
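The Organization step above can be sketched in a few lines. This is a simplified illustration, not a production pipeline: it covers missing-data imputation, noise filtering, unit standardization, and context tagging, and leaves out timestamp alignment. The function names, the Fahrenheit input, and the context fields are assumptions.

```python
from statistics import median
from typing import Optional


def impute_forward(values: list[Optional[float]]) -> list[float]:
    """Fill gaps with the last seen value (the first sample must be present)."""
    filled: list[float] = []
    for value in values:
        if value is None:
            if not filled:
                raise ValueError("cannot impute a missing first sample")
            value = filled[-1]
        filled.append(value)
    return filled


def median_filter(values: list[float], window: int = 3) -> list[float]:
    """Suppress single-sample spikes with a sliding median (shorter at the edges)."""
    half = window // 2
    return [median(values[max(0, i - half): i + half + 1]) for i in range(len(values))]


def organize(raw_f: list[Optional[float]], context: dict) -> list[dict]:
    """Impute, filter, convert Fahrenheit to Celsius, and attach context."""
    cleaned = median_filter(impute_forward(raw_f))
    return [
        {**context, "seq": i, "temperature_c": round((f - 32) * 5 / 9, 2)}
        for i, f in enumerate(cleaned)
    ]


context = {"device_id": "CNC1", "process_segment": "milling", "batch": "B-001"}
# One missing sample (None) and one spike (500.0) in an otherwise steady signal.
records = organize([212.0, None, 500.0, 212.0, 212.0], context)
print(records[2])
```

Each output record is self-contained: it carries the cleaned value in a standard unit together with the device, process segment, and batch it belongs to.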

SupOS Platform Practices and Case Studies

SupOS, as a data platform utilizing the UNS concept, includes the following architectural components:

- Source Flow: Built on Node-RED, acting as a pipeline connecting devices and systems, responsible for transforming real-time protocol data into JSON payloads.

- Namespace: The core of SupOS, a semantic MQTT Broker and parser based on UNS, modeling data using Topic hierarchies and structured JSON payloads.

- Sink: The storage layer, which stores time-series Namespace data in time-series databases such as TimescaleDB and TDengine, and relational Namespace data in PostgreSQL, achieving efficient querying and compression.

- Event Flow: Coordinates Namespace data, integrating it into higher-level event/information flows, and supports merging JSON payloads and appending system-generated Prompts for LLM-powered optimization.

Actual Integration Case: Taking the integration of work order data (ERP), equipment status (PLC), and quality metrics (Excel) as an example, the SupOS platform first defines a clear structure through Namespace (e.g., Equipment/CNC1, Order/orderInfo, Quality/orderQualitylog). Subsequently, Node-RED nodes in Source Flow are used to parse, format (into JSON), and send data from REST API, Modbus PLC, and Excel files to the corresponding Namespaces. Finally, Event Flow connects real-time order and quality data to the LLM for root cause analysis, writing results to Quality/qualityAnalysis, thereby achieving full-link data integration and intelligent application.
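The Event Flow step in this case (merging JSON payloads and appending a system-generated prompt) might look roughly like the sketch below. The topic names come from the example above; the payload fields (`order_id`, `product`, `defects`) and the prompt wording are hypothetical, and the actual LLM call is left out.

```python
import json


def build_analysis_request(order: dict, quality: dict) -> dict:
    """Merge two namespace payloads and append a system-generated prompt."""
    if order["order_id"] != quality["order_id"]:
        raise ValueError("payloads refer to different orders")
    merged = {"order": order, "quality": quality}
    prompt = (
        "You are a quality engineer. Using the JSON context below, list the "
        "most likely root causes of the defects and suggest one corrective "
        "action for each.\n\n" + json.dumps(merged, indent=2)
    )
    return {
        "source_topics": ["Order/orderInfo", "Quality/orderQualitylog"],
        "target_topic": "Quality/qualityAnalysis",  # where the result is written
        "prompt": prompt,
    }


order = {"order_id": "WO-1001", "product": "bracket", "quantity": 200}
quality = {"order_id": "WO-1001", "defects": {"burr": 7, "undersize": 2}}
request = build_analysis_request(order, quality)
print(request["prompt"])
```

Keeping the merged context as JSON inside the prompt preserves the field names, so the model's answer can refer back to specific keys such as the defect type.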

Summary

The value of the Sense Layer is not in "moving data back," but in transforming data into an orderly, semantic, and consumable "digital world state." This is like turning disorganized raw materials into a parts library neatly arranged on shelves by category, allowing the intelligent layer to call, assemble, and reason at any time.

In short, the Sense Layer opens up the data path from OT devices and IT systems to AI analysis, giving industrial AI the "eyes" and "data network" to comprehensively perceive the factory.

Think Layer / Think

Definition: The "Think" stage is where raw data collected and processed in the "Sense" stage is transformed into actionable insights. The Think Layer is the "brain" of intelligent agents, responsible for understanding intent, generating solutions, and planning decisions. After the Sense Layer provides environmental status or user intent, the Think Layer analyzes and reasons about the input information, determines the goal the user or system wants to achieve, and generates corresponding solutions. These solutions are typically "structured," with the Think Layer translating natural language requirements into executable structured plans, such as flowcharts, function/tool call sequences, or control logic code. This is equivalent to preparing a "blueprint for action" for the Execution Layer.

Core Challenges and Agent Opportunities

Traditional industrial decision-making relies heavily on empirical intuition and passive software systems. In an era of labor shortages and rapid change, this model is not only unsustainable but also reveals its inherent limitations in handling massive amounts of information and complex dynamic environments. Therefore, the future of industrial AI goes beyond merely RAG (Retrieval Augmented Generation), chatbots, or passive question-answering; it requires closed-loop Agent systems capable of proactively making decisions, executing commands, and being responsible for outcomes. This shift enables artificial intelligence to truly bridge the gap between "thinking" and "acting," realizing a deep convergence of intelligence and execution.

Factorio: The Ideal Testbed for Intelligent Agents

To explore this possibility, we introduced Factorio—a "controlled yet complex, physically consistent industrial simulation environment." It is not just a game; it is the ideal "digital sandbox" for industrial LLM Agents. Here, every decision made by an Agent receives physical feedback just like in a real factory, but without incurring the high costs and risks of the real world. Factorio, as a unique industrial-grade digital twin testing platform, allows researchers and developers to, without physical hardware constraints:

- Deeply test industrial LLM systems in physically consistent complex environments: It provides a highly realistic factory microcosm, simulating real industrial elements such as resources, production chains, and logistics, enabling LLM Agents to learn and validate in complexity close to real-world scenarios.

- Visualize Agent decisions and their consequences in real-time, achieving "what is thought is seen": Every operation of the Agent (e.g., building, dismantling, configuring equipment, optimizing layout) can be instantly displayed on the visual interface, greatly enhancing the transparency and interpretability of the decision-making process.

- Experiment with various automation strategies at low cost and high efficiency: Compared to the trial-and-error costs in real factories, Factorio offers infinite experimental space, allowing Agents to boldly try, rapidly iterate, and accelerate the validation and optimization of innovative strategies.

- Validate AI's planning and optimization capabilities against established industrial mechanisms, accelerating model deployment: It provides a strict feedback loop for AI models' planning, scheduling, and optimization, ensuring that Agents can not only "think" but also translate their thoughts into effective and measurable physical actions, significantly accelerating the research and development cycle of industrial AI applications.

This "natural laboratory" approach significantly accelerates the research cycle for industrial AI applications, allowing us to focus on agent intelligence itself.

To fully unleash the intelligent potential of Agents and enable them to tackle more complex industrial challenges, we are further focusing on their capabilities in multi-agent collaboration and powerful tool calling.

Multi-Agent Collaboration and Tool Calling

Industrial systems often require multiple specialized intelligent agents to work collaboratively. The combination of JSON and MQTT, through semantic message exchange, enables transparent multi-agent collaboration. For example, in a crisis scenario in Factorio, pollution, production, and resource Agents can perform independent analysis by subscribing to their respective relevant Topic patterns (e.g., factorio/pollution/#, factorio/production/#), and publish structured JSON decisions via Topics like factorio/coordination/crisis_response to achieve coordinated responses. For instance, if a furnace fails, Agents can coordinate to activate backup production lines, deploy efficiency modules, and adjust resource allocation. This mechanism achieves semantic transparency and temporal flexibility, allowing complex system-level optimizations to emerge from shared contextual information among specialized Agents.
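The subscription patterns above rely on MQTT's `+` (single-level) and `#` (multi-level) wildcards. The sketch below implements that matching rule in plain Python and uses it to decide which agent sees which message. It is a simplified matcher (it ignores special cases such as `$`-prefixed system topics), and the agent names and the decision payload are made up for illustration.

```python
import json


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a filter with '+' and '#' wildcards."""
    filter_parts = topic_filter.split("/")
    topic_parts = topic.split("/")
    for i, part in enumerate(filter_parts):
        if part == "#":
            # Multi-level wildcard: valid only as the last level, matches the rest.
            return i == len(filter_parts) - 1
        if i >= len(topic_parts):
            return False
        if part != "+" and part != topic_parts[i]:
            return False
    return len(filter_parts) == len(topic_parts)


# Hypothetical agents and the topic patterns they subscribe to.
SUBSCRIPTIONS = {
    "pollution_agent": "factorio/pollution/#",
    "production_agent": "factorio/production/#",
}


def recipients(topic: str) -> list[str]:
    """List the agents whose subscription matches the given topic."""
    return sorted(name for name, f in SUBSCRIPTIONS.items() if topic_matches(f, topic))


print(recipients("factorio/production/furnace_3/status"))

# A structured decision an agent might publish for coordination.
decision = json.dumps({
    "topic": "factorio/coordination/crisis_response",
    "agent": "production_agent",
    "action": "activate_backup_line",
    "reason": "furnace_3 reported a fault",
})
print(decision)
```

Because each agent filters by topic rather than polling every source, adding a new specialized agent only requires a new subscription pattern.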

Furthermore, to enhance decision-making capabilities, the Think Layer will also possess powerful Tool Access capabilities, connecting to numerical solvers, machine learning models, external APIs, etc., to dynamically perform operations such as querying databases and calculating schedules. For example, OpenAI's function calling feature, combined with the open-source LangChain framework, allows LLMs to call factory databases or MES systems as needed, achieving autonomous decision-making and action. To enhance decision trustworthiness, Chain-of-Thought reasoning is used to enable models to reason step-by-step explicitly, thereby generating explainable decision processes.
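Tool access generally follows one pattern regardless of framework: the model emits a structured call (a tool name plus arguments), and the host program looks up and runs the matching function. The sketch below shows that dispatch step only, without any LLM or vendor SDK. The tool names, the in-memory "MES" data, and the JSON call shape are assumptions for illustration.

```python
import json
from typing import Callable

TOOLS: dict[str, Callable[..., object]] = {}


def tool(func: Callable[..., object]) -> Callable[..., object]:
    """Register a function so a model-issued call can reach it by name."""
    TOOLS[func.__name__] = func
    return func


@tool
def query_open_orders(line: str) -> list[str]:
    """Stand-in for a MES or database query (the data is made up)."""
    fake_mes = {"line1": ["WO-1001", "WO-1002"], "line2": ["WO-1003"]}
    return fake_mes.get(line, [])


@tool
def estimate_finish_minutes(units: int, units_per_minute: float) -> float:
    """Stand-in for a scheduling calculation."""
    return units / units_per_minute


def dispatch(tool_call_json: str) -> dict:
    """Run one structured tool call and wrap the outcome for the model."""
    call = json.loads(tool_call_json)
    name, args = call["name"], call.get("arguments", {})
    if name not in TOOLS:
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        return {"ok": True, "result": TOOLS[name](**args)}
    except TypeError as exc:  # wrong or missing arguments
        return {"ok": False, "error": str(exc)}


print(dispatch('{"name": "query_open_orders", "arguments": {"line": "line1"}}'))
print(dispatch('{"name": "shutdown_plant"}'))
```

Returning errors as data rather than raising lets the model see why a call failed and try again, and the explicit registry doubles as an allowlist of what the agent may do.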

Project and Practice Examples: In this domain, we have undertaken projects such as Factory Agent, simulated factory practices, Node-RED Copilot, and BIS (Business Intelligence Assistant) as an industrial Copilot. Among them, BIS can automatically generate MES (Manufacturing Execution System) business processes based on user-input PRDs (Product Requirement Documents) and provide intelligent insights and optimization suggestions, significantly improving the design and development efficiency of MES systems.

Execution Layer / Do

It can also be understood as an AI-human collaborative "Execution IDE." The Execution Layer is the "hands and feet" of intelligent agents, whose role is to translate structured language decisions output by the Think Layer into real-world control behaviors. When the Think Layer makes a decision (for example, suggesting to adjust the parameters of a machine or to schedule a batch of logistics transportation), the Execution Layer is responsible for issuing commands and implementing actions, driving actual equipment, software, or personnel to complete the decision, thereby closing the Sense-Think-Do loop. The core of the Execution Layer is to map abstract digital solutions to specific physical/digital actions. For example, a "reduce temperature to increase output" plan generated by the intelligent layer might require the execution layer to call the industrial control system API to reduce the heating furnace temperature by 5°C, or issue instructions through the scheduling system for an AGV to deliver raw materials in advance.

Model Context Protocol (MCP) and Node-RED Flow Engine

To reliably execute instructions in complex, heterogeneous environments, the Execution Layer adopts the Model Context Protocol (MCP) as its unified calling interface. MCP provides a common language for agent execution: it standardizes command message formats, contextual information, and execution plans, so that different subsystems can understand and correctly respond to agent instructions. In short, MCP lets intelligent agents collaborate with systems and devices in a high-level, conversational style, much as humans collaborate, avoiding the rigidity of hand-wired API calls. Applied to Node-RED, its key enhanced features include:

- Retrieving and updating Node-RED flows via MCP.

- Providing a multi-version flow backup system with integrity validation.

- Adding detailed argument descriptions for tools, better serving LLMs and handling complex tasks.

- Obtaining available node information (name, help, module name), rather than raw code.

- Installing new node modules via LLM, and managing module and node states.

- Supporting management of tabs, individual nodes, searching nodes, accessing settings and runtime status, and remotely triggering inject nodes.

Model Context Protocol (MCP) Tools

MCP tools are further subdivided into Flow Tools (managing flows), Node Tools (managing nodes), Backup Tools (backup management), Settings Tools (system settings), and Utility Tools (general-purpose helpers), providing comprehensive Node-RED control capabilities.

supOS MCP Experimental Features: To further enhance interaction with LLMs, supOS provides experimental MCP functionalities. It allows LLMs to access and interpret real-time UNS content via built-in MCP Server and Client. Supported tools include get-model-topic-tree (get Topic tree structure), get-model-topic-detail (get Topic metadata), get-topic-realtime-data (get real-time data for specified Topic), and get-all-topic-realtime-data (get real-time data for all related Topics). These tools greatly expand LLMs' perception and interaction capabilities on the SupOS platform, enabling the construction of more intelligent Agents.
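On the wire, MCP messages are JSON-RPC 2.0, and a tool invocation is a `tools/call` request carrying the tool name and its arguments. The sketch below only builds such requests; it does not open a connection or perform the initialization handshake a real client needs. The tool name comes from the list above, while the argument name `topic` and its value are hypothetical, since the actual parameter schema is not given here.

```python
import itertools
import json
from typing import Optional

_request_ids = itertools.count(1)


def mcp_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    message: dict = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def call_tool(name: str, arguments: dict) -> str:
    """Build an MCP `tools/call` request for a named tool."""
    return mcp_request("tools/call", {"name": name, "arguments": arguments})


# Ask the server which tools it offers, then call one of them.
list_request = mcp_request("tools/list")
data_request = call_tool(
    "get-topic-realtime-data",
    {"topic": "Equipment/CNC1"},  # hypothetical argument name and value
)
print(list_request)
print(data_request)
```

In practice a client would first read the tool's declared input schema from the `tools/list` response and build the arguments from that, rather than hard-coding them.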

If you are interested in how LLM Agents can deeply interact with industrial systems like Node-RED, we invite you to visit our open-source node-red-mcp-server GitHub repository (https://github.com/supcon-international/node-red-mcp-server) to personally experience this powerful industrial AI interaction interface.

Node-RED Flow Engine

A crucial component in the Execution Layer architecture is the Node-RED flow engine. Node-RED orchestrates execution logic through visual data flows, making it highly suitable for rapid setup of multi-step control processes in industrial settings. By developing specialized plugin nodes for Node-RED, the Agent's decision structure (such as flowcharts generated by the Copilot mentioned earlier) can be directly injected into Node-RED for execution. Node-RED then handles interaction with underlying devices and services, realizing low-code process execution. In this way, the decisions output by the intelligent layer become a series of nodes in Node-RED (e.g., sensor reading, judgment, execution action), which are then truly "run" in the execution layer. Flow here refers to the specific execution workflow structure, which can be a Node-RED node flow, a BPMN (Business Process Model and Notation) process, or a custom script sequence.
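To show what "a decision becomes a series of nodes" can mean in practice, the sketch below turns a linear plan into a Node-RED-style flow: a list of node objects linked by `id` and `wires`, grouped under a tab via `z`. It is an assumption-laden illustration: the plan format and `plan_to_flow` are hypothetical, the node properties are a minimal subset, and the output has not been validated against a specific Node-RED version.

```python
import json


def plan_to_flow(tab_label: str, steps: list[dict]) -> list[dict]:
    """Convert a linear plan into a list of Node-RED-style node objects."""
    tab_id = "tab1"
    flow: list[dict] = [{"id": tab_id, "type": "tab", "label": tab_label}]
    for index, step in enumerate(steps):
        is_last = index == len(steps) - 1
        node = {
            "id": f"n{index}",
            "type": step["type"],
            "z": tab_id,  # the tab this node belongs to
            "name": step["name"],
            "x": 140 + 220 * index,
            "y": 80,
            # Wire the single output to the next node; the last node is a sink.
            "wires": [] if is_last else [[f"n{index + 1}"]],
        }
        node.update(step.get("config", {}))
        flow.append(node)
    return flow


# Hypothetical plan: read a value, decide, and log the resulting action.
plan = [
    {"type": "inject", "name": "read temperature"},
    {
        "type": "function",
        "name": "judge",
        "config": {"func": "msg.payload = msg.payload > 80 ? 'reduce' : 'hold';\nreturn msg;"},
    },
    {"type": "debug", "name": "log action"},
]
flow = plan_to_flow("agent plan", plan)
print(json.dumps(flow, indent=2))
```

Because the plan is materialized as an ordinary flow, a human can open it in the editor, inspect each node, and modify it before it is deployed.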

Node-RED Dev Copilot: An Execution IDE for Humans and AI

Node-RED Dev Copilot is an innovative application of the MCP protocol in the Execution Layer. It is an AI programming assistant sidebar plugin that provides powerful AI assistance for Node-RED development. Its core functionalities include:

- Multi-platform AI support: Compatible with OpenAI, Google Gemini, DeepSeek, and custom OpenAI-compatible APIs.

- Real-time streaming (SSE): Provides immediate feedback through streaming responses.

- MCP protocol integration: Automatically discovers and calls MCP tools, enabling powerful AI assistance in Node-RED development.

- Intelligent tool calling: AI automatically selects and executes relevant tools based on requirements.

- Sidebar interface and multi-node switching: Can be used directly within the Node-RED editor, allowing free switching of LLM nodes.

- Secure storage and session history: Securely stores API keys through Node-RED's credential management mechanism and supports multi-turn conversation history.

Through Node-RED Dev Copilot, the Execution Layer truly becomes an "Execution IDE" simultaneously for human developers and AI, greatly enhancing the development efficiency and intelligence level of automated processes.

If you are interested in how Node-RED Dev Copilot assists AI programming, we invite you to visit our open-source GitHub repository (https://github.com/supcon-international/node-red-dev-copilot) to personally experience this Execution IDE for humans and AI.

MQTT Communication and Command Standardization

The Execution Layer interfaces with various southbound protocols and interfaces, such as PLC commands, REST APIs, database operations, robot control instructions, etc., mapping each step in the Flow to actual actions. To ensure real-time and reliable delivery of these actions, the Execution Layer generally employs lightweight messaging buses like MQTT as the control channel, combining a publish/subscribe model to achieve instant broadcast of commands and status feedback.

In the communication between Agents and the environment in the simulated factory, the MQTT Topic architecture and JSON message format are highly standardized. For example, commands sent by Agents must be in the following JSON format:

    {
      "command_id": "str (Optional field, used for recording decision process)",
      "action": "str (Must be one of the supported actions)",
      "target": "str (Target device ID for the action, optional)",
      "params": {
        "key1": "value1", ...
      }
    }

And the response messages from the system are as follows:

    {
      "timestamp": "float (Simulation timestamp)",
      "command_id": "str (From the player's command_id)",
      "response": "str (Feedback information)"
    }

Supported action commands include move (AGV movement), charge (AGV charging), unload (AGV unloading), load (AGV loading), and get_result (get KPI results). This standardization ensures clear, controllable, and traceable commands.
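A small validator makes the command contract above checkable before anything is published. This sketch uses the field names and action list given in this section; treating `params` as optional and the parameter name `target_point` are assumptions, since the schema above does not spell them out.

```python
SUPPORTED_ACTIONS = {"move", "charge", "unload", "load", "get_result"}


def validate_command(command: dict) -> list[str]:
    """Return a list of problems; an empty list means the command is well-formed."""
    errors: list[str] = []
    action = command.get("action")
    if not isinstance(action, str) or action not in SUPPORTED_ACTIONS:
        errors.append(f"unsupported action: {action!r}")
    for key in ("command_id", "target"):  # optional string fields
        if key in command and not isinstance(command[key], str):
            errors.append(f"{key} must be a string")
    if not isinstance(command.get("params", {}), dict):
        errors.append("params must be an object")
    return errors


def make_response(command: dict, sim_time: float, feedback: str) -> dict:
    """Build a response message that echoes the command's command_id."""
    return {
        "timestamp": float(sim_time),
        "command_id": command.get("command_id", ""),
        "response": feedback,
    }


good = {"command_id": "c-1", "action": "move", "target": "AGV_1",
        "params": {"target_point": "P3"}}  # hypothetical parameter name
bad = {"action": "fly", "params": []}

print(validate_command(good))
print(validate_command(bad))
print(make_response(good, 12, "accepted"))
```

Echoing `command_id` in the response is what makes each command traceable: a log of commands and responses can be joined on that field.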

Practical Scenarios and Design Considerations

In the Execution Layer, we focus on how to safely and efficiently implement AI decisions as on-site actions. Take AGV scheduling as an example: after the intelligent layer determines the delivery sequence for multiple AGVs, the execution layer needs to issue tasks to each AGV and supervise their completion. We have developed a Node-RED AGV control plugin that communicates with the AGV's dispatch controller and automatically translates the intelligent layer's scheduling plan into specific AGV route instructions, which are then published via MQTT to achieve centralized dispatch of the AGV fleet. In production process control, the execution layer sends optimized parameters to the DCS control system via the MCP protocol and monitors feedback to confirm that the parameters have been applied. When industrial robots or production line equipment are involved, the execution layer often also needs to consider safety interlocks and manual intervention mechanisms.

In real industrial control, a common practice is to introduce a "double confirmation" mechanism to prevent erroneous operations. Although this feature is not included in the current simulated factory Agent project, it will be considered in the execution layer design for real deployments in the future to ensure safety: agent decisions are first sent to the human-machine interface for review, where operators have the right to reject or adjust within a certain time window, before being finally issued to the equipment by the execution layer. This reflects the safety requirements of industrial closed-loop control.

Furthermore, the execution layer also needs to handle asynchronicity and real-time performance: industrial actions may have delays and concurrency; for example, controlling a batch of equipment requires synchronous coordination. Our solution is to combine state machines in Node-RED flows to manage multi-step instructions sequentially and concurrently, and to use MCP's context tracking capabilities to ensure that every action has a corresponding response and is not lost.

Additionally, through simulation, we have also verified the effectiveness of the execution layer: by having intelligent agents issue commands in a digital twin environment and observing virtual equipment responses, we optimize the execution logic before deploying it to real production. This attempt at simulated Agents effectively reduces deployment risks.

In summary, the execution layer transforms the "theoretical discussions" of the intelligent layer into "real-world action," with its unified protocol and flow engine ensuring consistent command and scheduling of various equipment actions, and allowing humans to transparently see what AI is doing at each step, enhancing the system's interpretability and controllability.
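The "double confirmation" mechanism described in this section can be sketched as a command that is held for a review window: operators may reject or adjust it while the window is open, and it is issued only after the window closes without a rejection. This is one possible reading of the mechanism, not an existing implementation; `HeldCommand`, the 30-second window, and the parameter name `target_point` are hypothetical. Times are passed in explicitly so the logic stays easy to test.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class HeldCommand:
    command: dict
    submitted_at: float
    window_s: float
    rejected: bool = False
    issued: bool = False
    audit: list = field(default_factory=list)

    def _window_open(self, now: float) -> bool:
        return now - self.submitted_at < self.window_s

    def reject(self, operator: str, now: float) -> bool:
        """Operator veto; only possible while the review window is open."""
        if self.issued or not self._window_open(now):
            return False
        self.rejected = True
        self.audit.append((now, operator, "rejected"))
        return True

    def adjust(self, operator: str, now: float, **params) -> bool:
        """Operator edits command parameters during the review window."""
        if self.issued or self.rejected or not self._window_open(now):
            return False
        self.command.setdefault("params", {}).update(params)
        self.audit.append((now, operator, f"adjusted {sorted(params)}"))
        return True

    def release(self, now: float, send: Callable[[dict], None]) -> bool:
        """Issue the command once the window has closed without a rejection."""
        if self.issued or self.rejected or self._window_open(now):
            return False
        send(self.command)
        self.issued = True
        self.audit.append((now, "system", "issued"))
        return True


sent: list = []
held = HeldCommand(
    {"action": "move", "target": "AGV_1", "params": {"target_point": "P3"}},
    submitted_at=0.0,
    window_s=30.0,
)
held.adjust("operator_a", now=10.0, target_point="P4")
early = held.release(now=20.0, send=sent.append)  # window still open
late = held.release(now=31.0, send=sent.append)   # window closed, not rejected
print(early, late, sent, held.audit)
```

A stricter variant would require an explicit approval instead of releasing by default; which policy is appropriate depends on the risk of the action being controlled.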

Application Scenarios and Case Studies

1. SUPCON NLDF (Natural Language Driven Factory) Simulator

SUPCON NLDF Simulator: The "Stanford Town" of Industrial Agents – A Growable, Quantifiable, Open Industrial Benchmark Simulator

SUPCON NLDF Simulator is a highly configurable discrete factory simulation environment implemented in SimPy. It not only simulates complex real-world advanced scheduling and resource allocation problems but also serves as an open benchmark platform for Agent technology to explore collaboration possibilities and continuous evolution in the industrial domain.

In this digital industrial world, akin to a "Stanford Town," we have built a dynamic ecosystem comprising 3 production lines, a raw material warehouse, and a final product warehouse. Each production line is meticulously equipped with A, B, and C workstations and a quality inspection station, all efficiently connected by intelligent conveyor belts and an AGV network. Players (or intelligent agent developers) need to operate a total of 6 AGVs, coordinating production and optimizing processes while flexibly responding to various random failures and emergencies, striving for the highest possible comprehensive KPI score.

Core Design Philosophy: External JSON Interface, Internal Natural Language Driven, Achieving Infinite Scalability. Communication between the simulation environment and player Agents occurs via standardized JSON messages. We require players to build robust natural language processing capabilities within their Agents, forming a complete JSON -> NL -> JSON decision loop. This not only enables machines to understand each other, collaborate, adapt, and make autonomous decisions through language, much like humans, but more critically, it creates a growable, iterable, and open arena for global players and researchers to continuously contribute to and upgrade. The NLDF Simulator aims to become a Dynamic Industrial Intelligence Benchmark, constantly pushing the decision-making and coordination capabilities of industrial AI Agents in complex real-world environments to new heights by introducing new challenges, more sophisticated industrial scenarios, and advanced evaluation mechanisms. Players can continuously optimize their Agents, share strategies, and collectively build a continuously evolving industrial intelligence ecosystem.

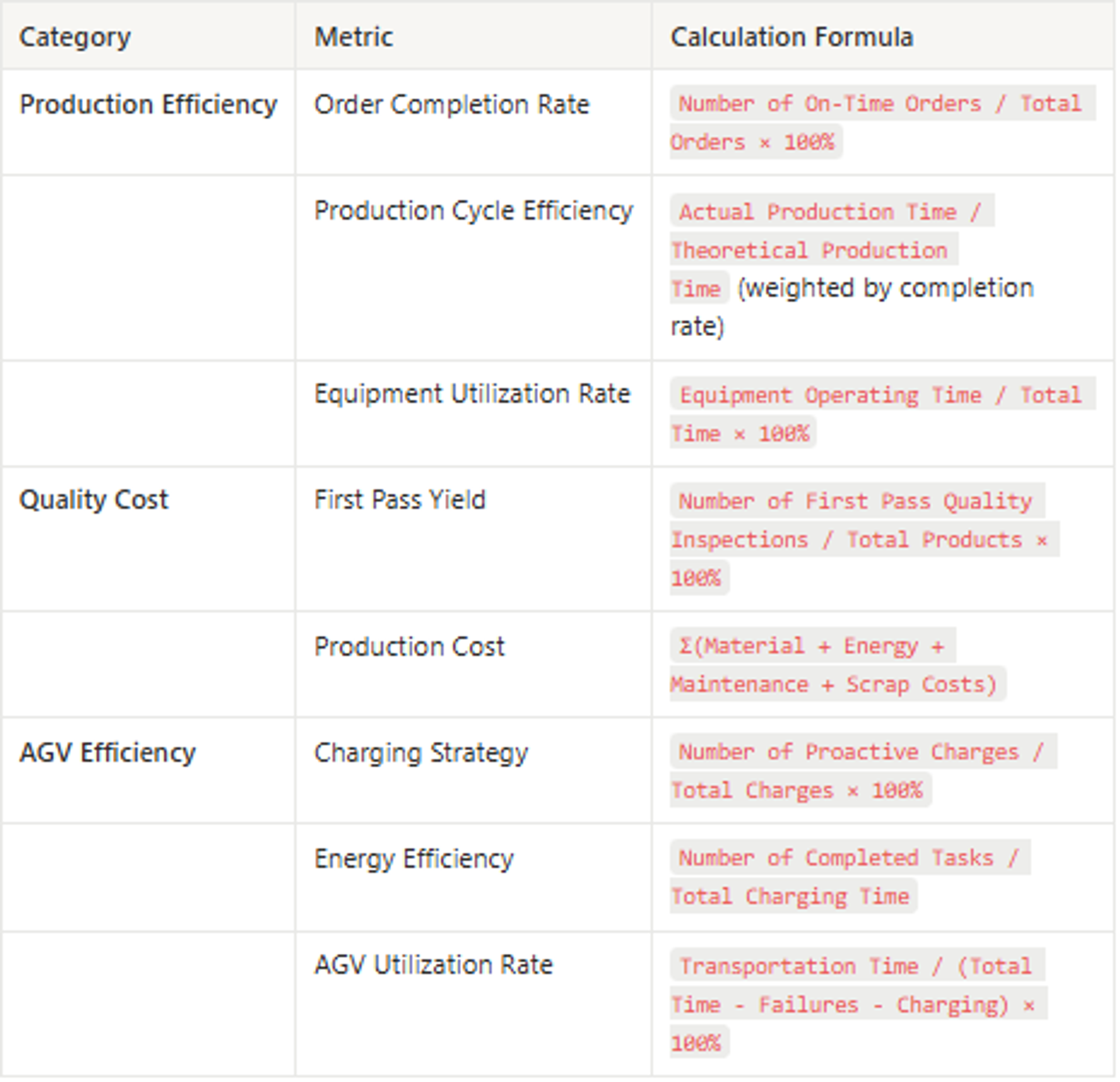

KPI Metrics and Scoring System:

The scoring system uses a 100-point scale: Production Efficiency (40 points), Quality Cost (30 points), and AGV Efficiency (30 points). This makes the NLDF Simulator a benchmark for quantitatively evaluating the decision-making and coordination capabilities of LLM Agents in complex industrial environments.
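The weighting above amounts to a simple weighted sum. The sketch below assumes each category has already been normalized to a value between 0 and 1; how the simulator actually derives those sub-scores is not specified here, so that part is an assumption, as are the key names.

```python
# Category weights from the scoring scheme (they sum to 100 points).
WEIGHTS = {
    "production_efficiency": 40.0,
    "quality_cost": 30.0,
    "agv_efficiency": 30.0,
}


def total_score(normalized: dict[str, float]) -> float:
    """Combine normalized sub-scores (each in [0, 1]) into a 100-point total."""
    if set(normalized) != set(WEIGHTS):
        raise ValueError(f"expected exactly these categories: {sorted(WEIGHTS)}")
    for name, value in normalized.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be within [0, 1], got {value}")
    return sum(WEIGHTS[name] * normalized[name] for name in WEIGHTS)


example = {"production_efficiency": 0.5, "quality_cost": 1.0, "agv_efficiency": 0.5}
print(total_score(example))
```

With these weights, an agent that is perfect on quality but mediocre elsewhere still loses most of its points on production efficiency, which is the heaviest category.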

We have open-sourced this code, and we welcome everyone to visit the GitHub repository (https://github.com/supcon-international/25-AdventureX-SUPCON-Hackathon) to experience this "Stanford Town" of the industrial domain firsthand and explore the industrial world it simulates.

2. Factory Agent + Factorio: Visualized Practice of Intelligent Control

Factorio, as a "controlled yet complex, physically consistent industrial simulation environment," serves as our ideal testbed for research into intelligent control with LLM Agents. By introducing LLM Agents into Factorio, we can "visualize Agent decisions and their outcomes" in real time and experiment with various automation strategies without the constraints of physical hardware. Factory Agent "perceives, reasons, acts, and learns" in this environment, demonstrating the practical control capabilities of intelligent agents.

Here are three intelligent control scenarios in Factorio and their key insights:

2.1 Scenario 1: Optimizing Solar Power Efficiency

Goal: Maximize power output per unit area within a limited space and ensure stable 24/7 power supply.

Through iterative spatial reasoning and feedback analysis, the Agent autonomously converged on a solar-to-accumulator ratio of about 1.18:1, very close to the 25:21 (roughly 1.19:1) ratio that Factorio players commonly cite as optimal. Its reasoning proceeded in three steps: it first observed "space availability, inefficient panel placement, and insufficient nighttime power"; it then hypothesized "accumulator shortage and poor layout"; and it finally adopted the strategy of "reorganizing the layout, testing ratios, and monitoring stability." The result closely matches designs that expert human players reach only after long periods of trial and error, suggesting that LLM Agents can carry out layout optimization of this kind through language-driven intelligence.
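
The ratio the Agent found can be sanity-checked with a short energy-balance calculation. The sketch below is not the Agent's method; it is a back-of-the-envelope derivation that assumes the standard vanilla Factorio values (60 kW peak per solar panel, 5 MJ per accumulator, a 25,000-tick day with 5,000 ticks each of dusk and dawn and 2,500 ticks of night, 60 ticks per second) and a constant load equal to the panel's average output.

```python
# Assumed vanilla Factorio constants (not taken from the article).
TICKS_PER_SECOND = 60
DAY_TICKS = 25_000
DUSK_TICKS = 5_000
NIGHT_TICKS = 2_500
DAWN_TICKS = 5_000
PANEL_PEAK_KW = 60.0
ACCUMULATOR_MJ = 5.0


def average_panel_kw() -> float:
    """Average output of one panel over a full day/night cycle."""
    daylight = DAY_TICKS - DUSK_TICKS - NIGHT_TICKS - DAWN_TICKS
    # Full output in daylight; the linear dusk/dawn ramps average half output.
    energy_fraction = (daylight + 0.5 * (DUSK_TICKS + DAWN_TICKS)) / DAY_TICKS
    return PANEL_PEAK_KW * energy_fraction


def accumulators_per_panel() -> float:
    """Storage needed so one panel can serve a constant load equal to its average."""
    load_kw = average_panel_kw()
    # Fraction of each ramp during which panel output is below the load.
    below_fraction = load_kw / PANEL_PEAK_KW
    ramp_seconds = below_fraction * (DUSK_TICKS + DAWN_TICKS) / TICKS_PER_SECOND
    night_seconds = NIGHT_TICKS / TICKS_PER_SECOND
    # Deficit is a triangle over the ramps plus a rectangle over the night (kW * s = kJ).
    deficit_kj = 0.5 * load_kw * ramp_seconds + load_kw * night_seconds
    return (deficit_kj / 1000.0) / ACCUMULATOR_MJ


if __name__ == "__main__":
    per_panel = accumulators_per_panel()
    print(f"accumulators per panel: {per_panel:.3f}")
    print(f"panels per accumulator: {1.0 / per_panel:.3f}")
```

Under these assumptions the calculation gives 0.84 accumulators per panel, i.e. 25 panels for every 21 accumulators, which is where the commonly cited figure of about 1.19:1 comes from.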

2.2 Scenario 2: Efficient Copper Mining Operations

Goal: Maximize copper ore collection per minute within a fixed area and power budget.

The Agent not only increased the number of drills but also reorganized the entire mining layout through spatial reasoning, throughput optimization, and power management. It started from the observation of a "disorganized drill layout, clogged output belts, and inconsistent substation coverage"; it then hypothesized that "optimizing drill spacing, simplifying conveyor belt routing, and strategically placing substations" would help; and it adopted the strategy of "uniform grid layout, aligned output belts, and staggered substation placement." The resulting layout, with drills on a uniform grid, output belts routed centrally, and substations placed strategically, increased ore output by 52% and achieved over 98% drill uptime. This demonstrates LLM Agents' ability to restructure and optimize industrial processes along several dimensions at once.

2.3 Scenario 3: Balancing Efficiency with Sustainability

Goal: Maintain high production output while keeping pollution levels below 500 ppm to avoid environmental penalties.

In this scenario, the Agent demonstrated adaptive trade-off reasoning. Rather than optimizing a single metric, it balanced the competing goals of production efficiency and environmental sustainability. It observed the initial "high output, high pollution" state, hypothesized that "production and pollution need to be balanced," and adopted the strategy of "adjusting module combinations and switching energy sources." By combining modules (efficiency modules + productivity modules) and selecting energy sources (solar + backup coal), the Agent kept pollution below the threshold while maintaining high output. This shows that LLM Agents can negotiate and balance multiple objectives, providing feasible and explainable solutions.
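
The trade-off in this scenario can be read as a constrained choice: among candidate configurations, pick the one with the highest output whose pollution stays under the limit. The sketch below shows only that selection logic. The candidate names and all output and pollution numbers are invented for illustration and are not measurements from the experiment; only the 500 threshold comes from the scenario goal.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

POLLUTION_LIMIT = 500.0  # threshold stated in the scenario goal


@dataclass(frozen=True)
class Candidate:
    name: str
    output: float     # production rate, illustrative units
    pollution: float  # same unit as POLLUTION_LIMIT


def best_feasible(candidates: Sequence[Candidate]) -> Optional[Candidate]:
    """Return the highest-output candidate under the pollution limit, if any."""
    feasible = [c for c in candidates if c.pollution < POLLUTION_LIMIT]
    if not feasible:
        return None
    return max(feasible, key=lambda c: c.output)


# Hypothetical candidates; every number here is made up for the example.
CANDIDATES = [
    Candidate("productivity modules + coal", output=120.0, pollution=740.0),
    Candidate("efficiency modules + solar", output=85.0, pollution=210.0),
    Candidate("mixed modules + solar with backup coal", output=105.0, pollution=460.0),
]

if __name__ == "__main__":
    choice = best_feasible(CANDIDATES)
    print(choice.name if choice else "no feasible configuration")
```

An LLM Agent reaches a comparable choice through observation and hypothesis rather than enumeration, but stating the constraint explicitly like this makes its decision easy to audit.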

Together, these Factorio cases demonstrate the potential of LLM Agents for autonomous decision-making, layout optimization, and multi-objective trade-offs in complex industrial scenarios.

We have also open-sourced the relevant code for Factory Agent in Factorio, and we welcome everyone to visit the GitHub repository (https://github.com/supcon-international/Factorio-Agent) to personally experience this game-like industrial world.

3. Supcon Fuyang Smart Factory: Real-World Agent Deployment

Supcon Fuyang Smart Factory is a real-world deployment of the full Sense-Think-Do architecture. According to the World Economic Forum, real-time AI Agents can increase productivity by 14% and reduce decision latency by 80%, a transformation that is now taking shape at the Fuyang Factory. Here, multiple Factory Agents have been deployed to improve the responsiveness and intelligence of production line operations.

- Agent 1: AGV Intelligent Scheduling. This Agent autonomously schedules AGVs by interpreting real-time equipment status events, achieving intelligent and efficient material transportation.

- Agent 2: OEE Real-time Monitoring and Optimization. This Agent continuously monitors OEE (Overall Equipment Effectiveness), identifies inefficient links, and immediately recommends optimization plans. It turns raw machine data into actionable insights, helping engineers respond faster, improve throughput, and reduce manual workload.
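
For readers unfamiliar with the metric, OEE is conventionally the product of three factors: availability, performance, and quality. The sketch below computes it from shift-level counters and reports the weakest factor, which is one simple way to "identify inefficient links." The function and parameter names are hypothetical, the numbers in the usage example are made up, and this is not the Fuyang Agent's actual implementation.

```python
def oee_factors(planned_min: float, run_min: float,
                ideal_cycle_min: float, total_count: int,
                good_count: int) -> dict:
    """Compute the three standard OEE factors from shift-level counters."""
    if planned_min <= 0 or run_min <= 0 or total_count <= 0:
        raise ValueError("planned_min, run_min and total_count must be positive")
    return {
        # Share of planned time the equipment was actually running.
        "availability": run_min / planned_min,
        # Actual throughput relative to the ideal cycle time.
        "performance": (ideal_cycle_min * total_count) / run_min,
        # Share of produced units that passed inspection.
        "quality": good_count / total_count,
    }


def oee(factors: dict) -> float:
    """OEE is the product of availability, performance and quality."""
    return factors["availability"] * factors["performance"] * factors["quality"]


def weakest_factor(factors: dict) -> str:
    """Name the factor that limits OEE the most."""
    return min(factors, key=factors.get)


if __name__ == "__main__":
    # Illustrative shift: 500 planned minutes, 400 running, 1-minute ideal cycle.
    shift = oee_factors(planned_min=500, run_min=400,
                        ideal_cycle_min=1.0, total_count=360, good_count=342)
    print(f"OEE = {oee(shift):.3f}, weakest factor: {weakest_factor(shift)}")
```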

The practices at Fuyang Factory, including achievements like predictive maintenance and traceable quality charts, demonstrate how Factory Agents can turn the vision of intelligent, data-driven operations into reality in a real industrial environment, serving as a model for the deep integration of OT, IT, and AI. Furthermore, the factory is exploring the use of reinforcement learning to adjust parameters, further enhancing the self-adaptive optimization capabilities of the production line.

Fuyang Factory is a truly operational automated factory, and we sincerely invite friends from all walks of life to visit and witness the real-world implementation and operation of these industrial miracles.

Looking Ahead

The factory is no longer just a "collection of machines" executing instructions.

It is a "living system" with the ability to perceive, judge, and make decisions.

We are building the "brain," the "nervous system," and the "hands and feet" of future industrial systems.

Imagine a factory where machines not only predict failures but also respond autonomously. Artificial intelligence can detect motor aging, cross-reference spare part availability in the ERP, generate purchase orders, and schedule technicians for servicing, all before a failure occurs. This shift from passive to proactive, coupled with AI-driven automation, is the foundation of the future factory, enabling industrial systems to operate with minimal human intervention and maximum efficiency.

This is precisely the value of the Sense-Think-Do three-layer architecture that we have built and continue to iterate on to address current industrial pain points. It is not merely a theoretical concept; it is backed by a series of products and cases, some already in practice and others still being explored.
